How to Enable and Configure Cluster Autoscaler in Amazon EKS for Dynamic Node Scaling

Introduction

In Kubernetes, efficient resource utilization is critical for handling workloads dynamically. Amazon EKS (Elastic Kubernetes Service) provides a managed Kubernetes platform, but scaling nodes automatically isn't enabled by default. The Cluster Autoscaler solves this problem by dynamically adjusting the number of nodes in your cluster based on workload demands.

This guide walks you through the step-by-step process of enabling and configuring the Cluster Autoscaler in an Amazon EKS cluster to handle dynamic node scaling.

Why Do You Need Cluster Autoscaler?

Without an autoscaler, the node count of an EKS cluster stays fixed, so a burst of new Pods can need more capacity than the existing nodes can provide.

Default Behavior in EKS

EKS clusters don’t automatically scale nodes.

Workloads may remain Pending if there aren’t enough resources.

Scenario Without Autoscaler

EKS cluster has 2 nodes.

You deploy a workload with 3 replicas.

2 Pods are running, while 1 Pod stays Pending due to insufficient resources.
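To confirm this situation on a live cluster, here is a minimal sketch built on standard kubectl commands. The two function names are hypothetical helpers added for illustration, and the Pod name and namespace are whatever your own workload uses.

```shell
# Hypothetical helper: list Pods stuck in the Pending phase in all namespaces.
list_pending_pods() {
  kubectl get pods --all-namespaces --field-selector=status.phase=Pending
}

# Hypothetical helper: show the details and recent events for one Pod.
# A Pod blocked by capacity usually carries a FailedScheduling event
# that mentions insufficient cpu or memory.
why_pending() {
  local pod="$1" namespace="${2:-default}"
  kubectl describe pod "$pod" --namespace "$namespace"
}
```

Run list_pending_pods first, then pass one of the listed Pod names to why_pending and read the Events section at the bottom of the output.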

How Cluster Autoscaler Helps

Adds nodes when Pods cannot be scheduled because the existing nodes lack capacity.

Scales down underutilized nodes to save costs.

Ensures all workloads have the resources they need automatically.

By enabling the Cluster Autoscaler, your EKS cluster dynamically adjusts node count based on workload demands, optimizing resource utilization and cost-efficiency.

IAM Policies for Cluster Autoscaler

The Cluster Autoscaler needs permission to inspect and resize the Auto Scaling groups behind your EKS node groups. Set these permissions up following the official Kubernetes Cluster Autoscaler AWS documentation. Here's the step-by-step process:

Create the Required IAM Policy

The IAM policy provides the necessary permissions for Cluster Autoscaler. Use the JSON provided below:

Step 1: Create an IAM Policy for Cluster Autoscaler

- Open the AWS Management Console → IAM → Policies → Create Policy.

Switch to the JSON tab and paste the following policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "autoscaling:DescribeAutoScalingGroups",
            "autoscaling:DescribeAutoScalingInstances",
            "autoscaling:DescribeLaunchConfigurations",
            "autoscaling:DescribeScalingActivities",
            "ec2:DescribeImages",
            "ec2:DescribeInstanceTypes",
            "ec2:DescribeLaunchTemplateVersions",
            "ec2:GetInstanceTypesFromInstanceRequirements",
            "eks:DescribeNodegroup"
          ],
          "Resource": ["*"]
        },
        {
          "Effect": "Allow",
          "Action": [
            "autoscaling:SetDesiredCapacity",
            "autoscaling:TerminateInstanceInAutoScalingGroup"
          ],
          "Resource": ["*"]
        }
      ]
    }

Step 2: Review and Name the IAM Policy

After pasting the JSON policy into the JSON tab in the AWS Console:

- Click Next to proceed to the review step.

On the Review policy page:

Enter a meaningful name for the policy.

For example: EKS-cluster-auto-scaler-policy

This name helps identify the policy as specific to the Cluster Autoscaler.
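If you prefer the command line to the console, the same policy can be created with the AWS CLI. This is a minimal sketch, assuming the policy JSON shown above has been saved to a local file and that your CLI credentials are allowed to create IAM policies. The function name and the file name are hypothetical.

```shell
# Hypothetical helper: create a customer-managed IAM policy from a JSON file.
# Arguments: the policy name, then the path to the saved policy document.
create_autoscaler_policy() {
  local policy_name="$1" policy_file="$2"
  aws iam create-policy \
    --policy-name "$policy_name" \
    --policy-document "file://$policy_file"
}
```

For example: create_autoscaler_policy EKS-cluster-auto-scaler-policy cluster-autoscaler-policy.json. The command's output includes the ARN of the new policy, which you need when attaching it to a role from the CLI.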

Step 3: Attach the Policy

Once the policy is created, attach it to the following roles, as described below:

The IAM Role used by your EKS Cluster.

The IAM Role used by your Node Groups (Auto Scaling Groups).

The Cluster Autoscaler Pod runs on the worker nodes and, in this setup, gets its AWS credentials from the node's instance role, so the Node Group Role is the attachment it relies on at runtime.

Steps to Attach:

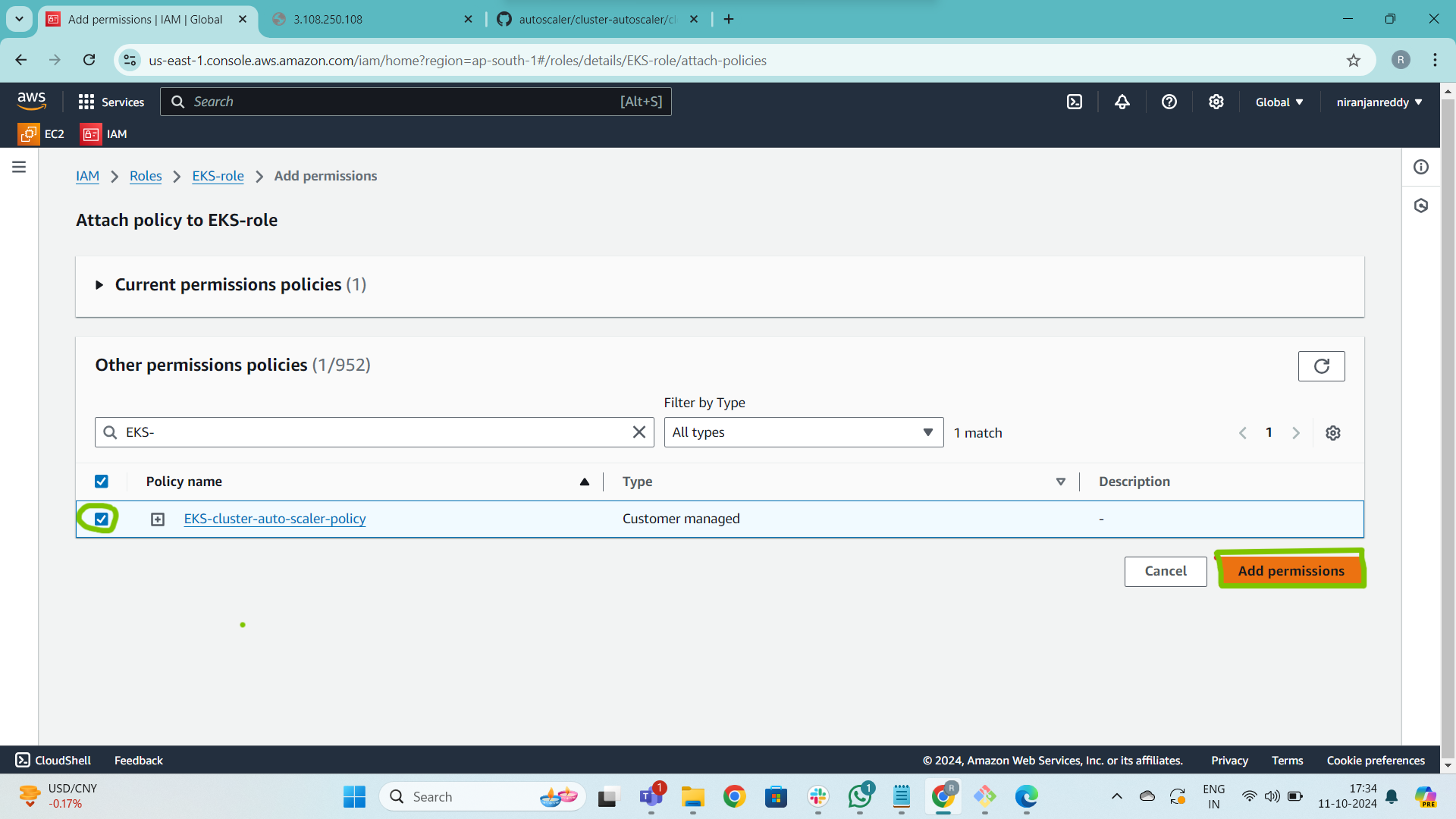

Attach Policy to the EKS Cluster Role

Navigate to the AWS Management Console.

Go to IAM → Roles.

Search for and select your EKS Cluster Role.

In my case, the EKS Cluster Role is named EKS-role.

Click Add permissions → Attach policies.

Search for the policy you created earlier (e.g., EKS-cluster-auto-scaler-policy).

Select the policy and click Add permissions.

Attach Policy to the Node Group Role

Return to IAM → Roles.

Search for and select your Node Group Role.

In my case, the Node Group Role is named eks-worker-role.

Click Add permissions → Attach policies.

Search for the policy you created earlier (e.g., EKS-cluster-auto-scaler-policy).

Select the policy and click Add permissions.
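The console steps above have a CLI equivalent. This is a sketch under a few assumptions: the policy was created in the same account as the role, it uses the default path, and the account is in the standard aws partition. The function name is hypothetical.

```shell
# Hypothetical helper: attach a customer-managed policy to an IAM role.
# The account ID is looked up from the credentials currently in use,
# then combined with the policy name to form the policy ARN.
attach_autoscaler_policy() {
  local role_name="$1" policy_name="$2" account_id
  account_id="$(aws sts get-caller-identity --query Account --output text)"
  aws iam attach-role-policy \
    --role-name "$role_name" \
    --policy-arn "arn:aws:iam::${account_id}:policy/${policy_name}"
}
```

With the names used in this guide, that would be attach_autoscaler_policy eks-worker-role EKS-cluster-auto-scaler-policy for the Node Group Role, and the same call with EKS-role for the EKS Cluster Role.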

Final Step: Apply the Cluster Autoscaler YAML

After attaching the IAM policies to the EKS Cluster Role and Node Group Role, you need to deploy the Cluster Autoscaler to your cluster using a YAML file. This file configures the Cluster Autoscaler to interact with your node group and enables scaling functionality.

Steps to Apply the YAML:

Visit the official GitHub repository: Cluster Autoscaler on AWS (the kubernetes/autoscaler repository, under cluster-autoscaler/cloudprovider/aws/examples).

- Locate the cluster-autoscaler-autodiscover.yaml example.

- Edit the YAML file:

- Update the --node-group-auto-discovery flag with your cluster’s name and tags.

For example, my cluster is named EKS-Demo.
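The edit can also be scripted. The sketch below assumes your downloaded copy of the manifest marks the cluster name with the literal placeholder <YOUR CLUSTER NAME>, which is how I recall the upstream example being written; check your file before relying on it. The function name is hypothetical.

```shell
# Hypothetical helper: fill in the cluster-name placeholder in the manifest.
# Writes to a temporary file first, then replaces the original, so the
# file name stays the same for the kubectl apply step.
# Assumes the cluster name contains no "/" or "&" characters.
fill_cluster_name() {
  local cluster_name="$1" manifest="$2" tmp
  tmp="$(mktemp)"
  sed "s/<YOUR CLUSTER NAME>/${cluster_name}/g" "$manifest" > "$tmp" &&
    mv "$tmp" "$manifest"
}
```

After running fill_cluster_name EKS-Demo cluster-autoscaler-autodiscover.yaml, the flag should end in k8s.io/cluster-autoscaler/EKS-Demo. Auto-discovery only finds Auto Scaling groups that carry the matching tags, so confirm that your node group's Auto Scaling group has them.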

Apply the YAML file to your cluster:

kubectl apply -f cluster-autoscaler-autodiscover.yaml

Verify the Deployment

Check if the Cluster Autoscaler Pod is running:

kubectl get pods -n kube-system
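The kube-system namespace holds many Pods, so it can help to narrow the check. This sketch assumes the Deployment is named cluster-autoscaler and its Pods carry the label app=cluster-autoscaler, as in the upstream example manifest; adjust both if your manifest differs. The function name is hypothetical.

```shell
# Hypothetical helper: wait for the autoscaler Deployment to finish rolling
# out (up to two minutes), then list only the autoscaler Pods.
check_autoscaler() {
  kubectl -n kube-system rollout status deployment/cluster-autoscaler --timeout=120s &&
    kubectl -n kube-system get pods -l app=cluster-autoscaler
}
```

If the rollout does not complete, running kubectl describe on the Pod usually shows why, for example an image pull failure.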

After Applying Cluster Autoscaler:

The Cluster Autoscaler detected the unscheduled Pod.

It automatically launched a new node in the node group to meet the resource requirements.

Once the node was ready, the third Pod transitioned to the Running state.

The next section shows how to read the logs to confirm that the autoscaler is working.

How Cluster Autoscaler Logs Reflect Scaling Actions

The Cluster Autoscaler dynamically manages the number of nodes in your cluster based on Pod requirements. Let’s explore how to check the logs and understand its behavior during scaling events:

Scenario 1: Scaling Up (Pods Need More Resources)

When workloads demand more resources (e.g., higher replicas or traffic increases):

What Happens:

The Cluster Autoscaler detects Pods that cannot be scheduled on the existing nodes.

It calculates the number of additional nodes required.

New nodes are provisioned in the node group to accommodate the workloads.

Check the Logs:

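To view scaling actions, read the logs of the Cluster Autoscaler Pod in the kube-system namespace and look for entries similar to the following (simplified here; the exact wording depends on the Cluster Autoscaler version):

[ScaleUp] Pod <pod-name> is unscheduled. Triggering scale-up.

[ScaleUp] Adding X node(s) to the node group <node-group-name>.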
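One way to pull such entries out of the log stream is sketched below. It assumes the Deployment is named cluster-autoscaler in kube-system, as in the upstream example manifest, and the function name is hypothetical. The pattern is deliberately loose so that it matches spellings such as ScaleUp, Scale-up, and scale_down.

```shell
# Hypothetical helper: print recent autoscaler log lines that mention
# scaling up or down. Reads the last 500 lines of the Deployment's logs
# and keeps case-insensitive matches of "scale", an optional "-" or "_",
# then "up" or "down".
autoscaler_scaling_logs() {
  kubectl -n kube-system logs deployment/cluster-autoscaler --tail=500 |
    grep -iE 'scale[-_]?(up|down)'
}
```

The same helper covers scale-down events, so it can be reused when checking the later scenarios.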

Scenario 2: Maintaining Desired Pods (No Scaling Action Required)

When the workload demand is steady, Cluster Autoscaler ensures that the cluster runs with the required resources without scaling up or down unnecessarily.

What Happens:

The cluster maintains the desired Pods and nodes.

If the traffic remains constant and no replicas are added, no changes are made.

Logs Indication:

[NoScale] All Pods are scheduled. No scaling action required.

Scenario 3: Scaling Down (Traffic Decreases)

When workload traffic reduces or replicas are scaled down manually:

What Happens:

Cluster Autoscaler identifies underutilized nodes.

It calculates whether those nodes can be removed safely without affecting scheduled Pods.

Underutilized nodes are terminated to optimize costs.

Check the Logs:

Logs for scale-down actions:

[ScaleDown] Node <node-name> is underutilized. Terminating node.

[ScaleDown] Removing X node(s) from the node group <node-group-name>.

Example Scenarios with Actions

Scaling Up Example:

Current State: 2 nodes, 3 replicas → 1 Pod Pending.

Autoscaler Action: Adds 1 node.

Logs Indication: Scale-up triggered to schedule pending Pod.

Scaling Down Example:

Current State: 3 nodes, replicas reduced to 2.

Autoscaler Action: Identifies 1 node as unnecessary and scales it down.

Logs Indication: Scale-down initiated to terminate the extra node.
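To reproduce these two examples yourself, you can change a Deployment's replica count and watch what happens to Pods and nodes. This is a minimal sketch using standard kubectl commands; the function name is hypothetical and the Deployment name is whatever your own workload is called.

```shell
# Hypothetical helper: set a Deployment's replica count, then list Pods
# (with the node each one landed on) and the current nodes.
scale_and_observe() {
  local deployment="$1" replicas="$2"
  kubectl scale deployment "$deployment" --replicas="$replicas" &&
    kubectl get pods -o wide &&
    kubectl get nodes
}
```

Call it with 3 replicas for the scale-up example and 2 for the scale-down example, then rerun kubectl get nodes after a few minutes. Expect scale-down to be slower than scale-up: as far as I know, a node has to stay unneeded for about ten minutes by default before the autoscaler removes it.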

Key Insights:

Cluster Autoscaler dynamically maintains optimal resource utilization.

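It adjusts nodes based on whether Pods can be scheduled and on the resources they request. Traffic affects the node count only indirectly, when it changes the number of Pods (for example, through added replicas).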

For low traffic, the cluster scales down to save costs; for high traffic, it scales up to ensure workloads are handled efficiently.

This results in a cost-effective, resilient, and well-optimized cluster.

Conclusion:

Cluster Autoscaler simplifies workload management in Amazon EKS by dynamically adjusting the number of nodes based on demand. It ensures optimal resource utilization, reduces costs, and maintains application performance during both high and low traffic periods. Follow this guide to enable Autoscaler in your EKS cluster and achieve efficient, automated scaling.